- 1. 2022 - Machine Learning Course Rules

- 2. 2021 - Machine Learning Course Rules

- 3. Session 1: 2021 - Introduction to the Basic Concepts of Machine Learning (Part 1)

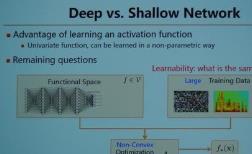

- 4. 2021 - Introduction to the Basic Concepts of Deep Learning (Part 2)

- 5. 2022 - Colab Tutorial

- 6. 2022 - PyTorch Tutorial 1

- 7. 2022 - PyTorch Tutorial 2

- 8. 2021 - Google Colab Tutorial

- 9. 2021 - PyTorch Tutorial, Part 1

- 10. 2021 - PyTorch Tutorial, Part 2 (English, with subtitles)

- 11. 2022 - Homework 1 (HW1)

- 12. 2021 - Homework 1 (HW1)

- 13. (Optional) To Learn More - Introduction to Deep Learning

- 14. (Optional) To Learn More - Backpropagation

- 15. (Optional) To Learn More - Predicting Pokemon

- 16. (Optional) To Learn More - Classifying Pokemon

- 17. (Optional) To Learn More - Logistic Regression

- 18. Session 2: 2021 - A Strategy Guide for Machine Learning Tasks

- 19. 2021 - What to Do When Your Neural Network Won't Train (1): Local Minima and Saddle Points

- 20. 2021 - What to Do When Your Neural Network Won't Train (2): Batches and Momentum

- 21. 2021 - What to Do When Your Neural Network Won't Train (3): Adaptive Learning Rate

- 22. 2021 - What to Do When Your Neural Network Won't Train (4): The Loss Function Can Matter Too

- 23. 2022 - Revisiting the Pokemon/Digimon Classifier: A Brief Look at the Principles of Machine Learning

- 24. (Optional) To Learn More - Gradient Descent Example 1

- 25. (Optional) To Learn More - Gradient Descent Example 2

- 26. (Optional) To Learn More - Optimization for Deep Learning (1/2)

- 27. (Optional) To Learn More - Optimization for Deep Learning (2/2)

- 28. 2022 - Homework 2 (HW2)

- 29. 2021 - HW2 Walkthrough (Chinese, low-resolution)

- 30. 2021 - HW2 Walkthrough (English, HD, with subtitles)

- 31. Session 3: 2021 - Convolutional Neural Networks (CNN)

- 32. 2022 - Why Do We Still Overfit Even After Using a Validation Set?

- 33. 2022 - Machine Learning That Lets You Have Your Cake and Eat It Too

- 34. (Optional) To Learn More - Spatial Transformer Layer

- 35. 2022 - HW3 Walkthrough

- 36. 2021 - HW3 Walkthrough (Chinese, low-resolution)

- 37. 2021 - HW3 Walkthrough (English, high-resolution, with subtitles)

- 38. Session 4: 2021 - Self-attention (Part 1)

- 39. 2021 - Self-attention (Part 2)

- 40. (Optional) To Learn More - Recurrent Neural Network (Part I)

- 41. (Optional) To Learn More - Recurrent Neural Network (Part II)

- 42. (Optional) To Learn More - Graph Neural Network (1/2)

- 43. (Optional) To Learn More - Graph Neural Network (2/2)

- 44. (Optional) To Learn More - Unsupervised Learning - Word Embedding

- 45. 2022 - HW4 Walkthrough

- 46. 2021 - HW4 Walkthrough (Chinese, low-resolution)

- 47. 2021 - HW4 Walkthrough (English, HD, no subtitles)

- 48. Session 5: 2021 - What to Do When Your Neural Network Won't Train (5): Batch Normalization

- 49. 2021 - Transformer (Part 1)

- 50. 2021 - Transformer (Part 2)

- 51. 2022 - All Kinds of Amazing Self-attention Variants

- 52. (Optional) To Learn More - Non-Autoregressive Sequence Generation

- 53. (Optional) To Learn More - Pointer Network

- 54. 2022 - HW5 Walkthrough

- 55. 2021 - HW5 Walkthrough (Chinese) + Judgeboi Explanation

- 56. 2021 - HW5 Walkthrough, slides tutorial (English version, machine-translated)

- 57. 2021 - HW5 Walkthrough, code tutorial (English version, machine-translated)

- 58. Session 6: 2021 - Generative Adversarial Network (GAN) (1): Introduction to Basic Concepts

- 59. 2021 - Generative Adversarial Network (GAN) (2): Theory and WGAN

- 60. 2021 - Generative Adversarial Network (GAN) (3): Evaluating Generators and Conditional Generation

- 61. 2021 - Generative Adversarial Network (GAN) (4): Cycle GAN

- 62. (Optional) To Learn More - GAN Basic Theory

- 63. (Optional) To Learn More - General Framework

- 64. (Optional) To Learn More - WGAN EBGAN

- 65. (Optional) To Learn More - Unsupervised Learning - Deep Generative Model (Part I)

- 66. (Optional) To Learn More - Unsupervised Learning - Deep Generative Model (Part II)

- 67. (Optional) To Learn More - Flow-based Generative Model

- 68. 2021 - HW6 Walkthrough (Chinese, low-resolution)

- 69. 2021 - HW6 Walkthrough (English, high-resolution, with subtitles)

- 70. 2022 - HW6 Walkthrough

- 71. Session 7: 2021 - Self-supervised Learning (1): Sesame Street and Attack on Titan

- 72. 2021 - Self-supervised Learning (2): An Introduction to BERT

- 73. 2021 - Self-supervised Learning (3): Curious Facts about BERT

- 74. 2021 - Self-supervised Learning (4): The Ambitions of GPT

- 75. 2022 - How to Use Self-supervised Models Effectively: Data-Efficient & Parameter-Efficient Tuning

- 76. 2022 - Amazing Self-supervised Learning Models for Speech and Images

- 77. (Optional) To Learn More - BERT and its family - Introduction and Fine-tune

- 78. (Optional) To Learn More - ELMo BERT GPT XLNet MASS BART UniLM ELECTRA othe

- 79. (Optional) To Learn More - Multilingual BERT

- 80. (Optional) To Learn More - GPT-3: The Model from the Dark Continent of Hunter x Hunter

- 81. 2021 - HW7 Walkthrough (Chinese, low-resolution)

- 82. 2022 - Homework 7

- 83. Session 8: 2021 - Auto-encoder (Part 1): Basic Concepts

- 84. 2021 - Auto-encoder (Part 2): The Bow-tie Voice Changer and More Applications

- 85. 2021 - Anomaly Detection (1/7)

- 86. 2021 - Anomaly Detection (2/7)

- 87. 2021 - Anomaly Detection (3/7)

- 88. 2021 - Anomaly Detection (4/7)

- 89. 2021 - Anomaly Detection (5/7)

- 90. 2021 - Anomaly Detection (6/7)

- 91. 2021 - Anomaly Detection (7/7)

- 92. (Optional) To Learn More - Unsupervised Learning - Linear Methods

- 93. (Optional) To Learn More - Unsupervised Learning - Neighbor Embedding

- 94. 2021 - HW8 Walkthrough (Chinese, low-resolution)

- 95. 2022 - Homework 8 Walkthrough

- 96. Session 9: 2021 - Explainable Machine Learning (Part 1): Why Can a Neural Network Correctly Tell Pokemon from Digimon?

- 97. 2021 - Explainable Machine Learning (Part 2): What Does a Cat Look Like in the Machine's Mind?

- 98. 2022 - Adversarial Attacks in Natural Language Processing (taught by TA Cheng-Han Chiang, 姜成翰) - Part 1

- 99. 2021 - HW9 Walkthrough (Chinese, low-resolution)

- 100. 2022 - Homework 9

- 101. Session 10: 2021 - Malicious Attacks from Humans (Adversarial Attack) (Part 1): Basic Concepts

- 102. 2021 - Malicious Attacks from Humans (Adversarial Attack) (Part 2): Can Neural Networks Escape Humanity's Bottomless Malice?

- 103. 2022 - Adversarial Attacks in Natural Language Processing (taught by TA Cheng-Han Chiang, 姜成翰) - Part 2

- 104. 2022 - Adversarial Attacks in Natural Language Processing (taught by TA Cheng-Han Chiang, 姜成翰) - Part 3

- 105. 2022 - Imitation Attacks and Backdoor Attacks in Natural Language Processing (taught by TA Cheng-Han Chiang, 姜成翰)

- 106. (Optional) To Learn More - More about Adversarial Attack (1/2)

- 107. (Optional) To Learn More - More about Adversarial Attack (2/2)

- 108. 2021 - HW10 Walkthrough (Chinese, low-resolution)

- 109. 2022 - Homework 10

- 110. Session 11: 2021 - Overview of Domain Adaptation

- 111. 2022 - Three Stories of Messing Around with the Self-supervised Model BERT

- 112. 2021 - HW11 Walkthrough: Domain Adaptation

- 113. 2022 - Homework 11 Walkthrough

- 114. Session 12: 2021 - Overview of Reinforcement Learning (1): Like Machine Learning, Reinforcement Learning Follows Three Steps

- 115. 2021 - Overview of Reinforcement Learning (2): Policy Gradient and Thoughts on Taking the Course

- 116. 2021 - Overview of Reinforcement Learning (3): Actor-Critic

- 117. 2021 - Overview of Reinforcement Learning (4): What If Rewards Are Extremely Sparse? The Machine Quenches Its Thirst by Imagining Plums

- 118. 2021 - Overview of Reinforcement Learning (5): How Can a Machine Learn from Demonstrations? Inverse Reinforcement Learning (Inverse RL)

- 119. 2021 - HW12 Walkthrough (Chinese, HD)

- 120. 2022 - HW12 Walkthrough (English version) - 1/2

- 121. (Optional) To Learn More - Deep Reinforcement Learning

- 122. Session 13: 2021 - Neural Network Compression (1): Network Pruning and the Lottery Ticket Hypothesis

- 123. 2021 - Neural Network Compression (2): Compressing Neural Networks from Different Angles

- 124. (Optional) To Learn More - Proximal Policy Optimization (PPO)

- 125. (Optional) To Learn More - Q-learning (Basic Idea)

- 126. (Optional) To Learn More - Q-learning (Advanced Tips)

- 127. (Optional) To Learn More - Q-learning (Continuous Action)

- 128. (Optional) To Learn More - Geometry of Loss Surfaces (Conjecture)

- 129. 2021 - HW13 Walkthrough (Chinese, HD)

- 130. 2022 - HW13 Walkthrough

- 131. Session 14: 2021 - Lifelong Learning (1): Why Can't Today's AI Become Skynet? Catastrophic Forgetting

- 132. 2021 - Lifelong Learning (2): Catastrophic Forgetting

- 133. 2021 - HW14 Walkthrough (Chinese, HD)

- 134. 2022 - HW14 Walkthrough

- 135. Session 15: 2021 - Meta Learning (1): Like Machine Learning, Meta Learning Also Follows Three Steps

- 136. 2021 - Meta Learning (2): Everything Can Be Meta

- 137. 2022 - All Sorts of Weird Uses of Meta Learning

- 138. (Optional) To Learn More - Meta Learning - MAML (1)

- 139. (Optional) To Learn More - Meta Learning - MAML (2)

- 140. (Optional) To Learn More - Meta Learning - MAML (3)

- 141. (Optional) To Learn More - Meta Learning - MAML (4)

- 142. (Optional) To Learn More - Meta Learning - MAML (5)

- 143. (Optional) To Learn More - Meta Learning - MAML (6)

- 144. (Optional) To Learn More - Meta Learning - MAML (7)

- 145. (Optional) To Learn More - Meta Learning - MAML (8)

- 146. (Optional) To Learn More - Meta Learning - MAML (9)

- 147. (Optional) To Learn More - Gradient Descent as LSTM (1/3)

- 148. (Optional) To Learn More - Gradient Descent as LSTM (2/3)

- 149. (Optional) To Learn More - Gradient Descent as LSTM (3/3)

- 150. (Optional) To Learn More - Meta Learning - Metric-based (1)

- 151. (Optional) To Learn More - Meta Learning - Metric-based (2)

- 152. (Optional) To Learn More - Meta Learning - Metric-based (3)

- 153. (Optional) To Learn More - Meta Learning - Train+Test as RNN

- 154. 2022 - HW15 Walkthrough

- 155. [Machine Learning] Course Conclusion: All Done!

Lecture 0 (2019/02/19): Course Logistics [slides]

Registration: [Google Form]

Lecture 1 (2019/02/26): Introduction [slides] (video)

Guest Lecture (R103): [PyTorch Tutorial]

Lecture 2 (2019/03/05): Neural Network Basics [slides] (video)

Suggested Readings:

[Linear Algebra]

[Linear Algebra Slides]

[Linear Algebra Quick Review]

A1 (2019/03/05): Dialogue Response Selection [A1 pages]

Lecture 3 (2019/03/12): Backpropagation [slides] (video)

Word Representation [slides] (video)

Suggested Readings:

[Learning Representations]

[Vector Space Models of Semantics]

[RNNLM: Recurrent Neural Network Language Model]

[Extensions of RNNLM]

[Optimization]

Lecture 4 (2019/03/19): Recurrent Neural Network [slides] (video)

Basic Attention [slides] (video)

Suggested Readings:

[RNN for Language Understanding]

[RNN for Joint Language Understanding]

[Sequence-to-Sequence Learning]

[Neural Conversational Model]

[Neural Machine Translation with Attention]

[Summarization with Attention]

[Normalization]

A2 (2019/03/19): Contextual Embeddings [A2 pages]

Lecture 5 (2019/03/26): Word Embeddings [slides] (video)

Contextual Embeddings - ELMo [slides] (video)

Suggested Readings:

[Estimation of Word Representations in Vector Space]

[GloVe: Global Vectors for Word Representation]

[Sequence Tagging with BiLM]

[Learned in Translation: Contextualized Word Vectors]

[ELMo: Embeddings from Language Models]

[More Embeddings]

2019/04/02: Spring Break (A1 due)

Lecture 6 (2019/04/09): Transformer [slides] (video)

Contextual Embeddings - BERT [slides] (video)

Gating Mechanism [slides] (video)

Suggested Readings:

[Contextual Word Representations Introduction]

[Attention is all you need]

[BERT: Pre-training of Bidirectional Transformers]

[GPT: Improving Understanding by Unsupervised Learning]

[Long Short-Term Memory]

[Gated Recurrent Unit]

[More Transformer]

Lecture 7 (2019/04/16): Reinforcement Learning Intro [slides] (video)

Basic Q-Learning [slides] (video)

Suggested Readings:

[Reinforcement Learning Intro]

[Stephane Ross' thesis]

[Playing Atari with Deep Reinforcement Learning]

[Deep Reinforcement Learning with Double Q-learning]

[Dueling Network Architectures for Deep Reinforcement Learning]

A3 (2019/04/16): RL for Game Playing [A3 pages]

Lecture 8 (2019/04/23): Policy Gradient [slides] (video)

Actor-Critic (video)

More about RL [slides] (video)

Suggested Readings:

[Asynchronous Methods for Deep Reinforcement Learning]

[Deterministic Policy Gradient Algorithms]

[Continuous Control with Deep Reinforcement Learning]

A2 Due

Lecture 9 (2019/04/30): Generative Adversarial Networks [slides] (video)

(Lectured by Prof. Hung-Yi Lee)

Lecture 10 (2019/05/07): Convolutional Neural Networks [slides]

A4 (2019/05/07): Drawing [A4 pages]

2019/05/14: Break (A3 due)

Lecture 11 (2019/05/21): Unsupervised Learning [slides]

NLP Examples [slides]

Project Plan [slides]

Special (2019/05/28): Company Workshop, Registration: [Google Form]

2019/06/04: Break (A4 due)

Lecture 12 (2019/06/11): Project Progress Presentation

Course and Career Discussion

Special (2019/06/18): Company Workshop, Registration: [Google Form]

Lecture 13 (2019/06/25): Final Presentation